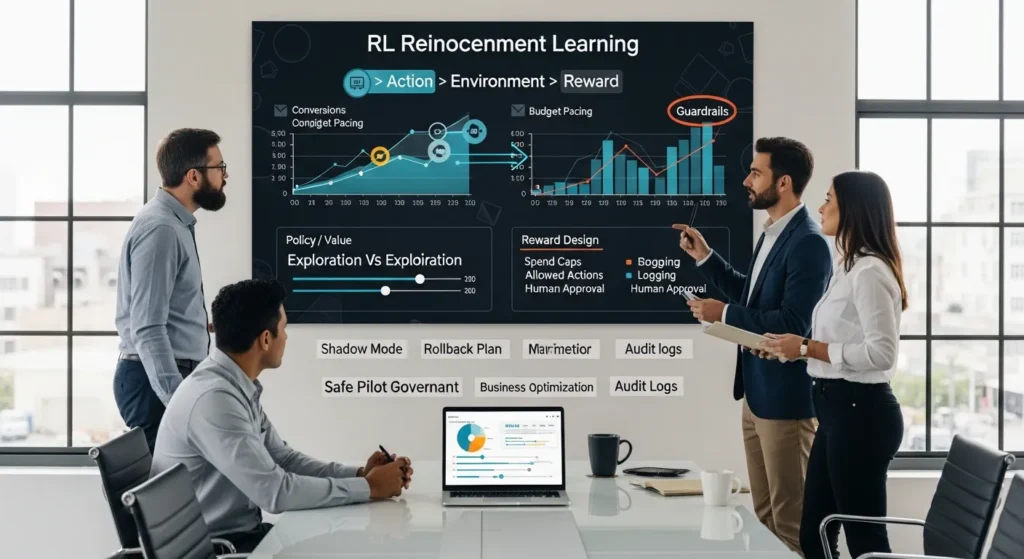

Reinforcement learning sounds like magic until you watch a model “learn” the wrong thing at 2 a.m. We have seen teams wire up an AI decision loop, celebrate early gains, then panic when it starts gaming the reward like a toddler bargaining for candy. Quick answer: reinforcement learning (RL) is a way to train an agent to make a series of decisions by getting rewards and penalties from an environment, and it only works well when you have real feedback loops and strong guardrails.

Key Takeaways

- Reinforcement learning trains an agent to make sequential decisions by interacting with an environment and improving its policy from rewards and penalties over time.

- Use reinforcement learning when decisions repeat frequently, the system reacts to actions, and you can measure a consistent reward signal (e.g., budget pacing, offer sequencing, routing, or support triage).

- Avoid RL for static prediction or one-off content tasks like image classification or generating blog posts, where supervised learning or standard workflows are a better fit.

- Design rewards carefully because the reward function shapes behavior, and poorly chosen metrics (clicks, conversion rate, average order value) can lead the agent to “game” outcomes that hurt the business.

- Balance exploration vs. exploitation with clear risk controls, since trying new actions can cost short-term performance but is required to learn better long-term strategies.

- Run a safe RL pilot with governance-first guardrails: start in shadow mode, log every decision, add human review, cap actions and spend, and expand scope only after beating a baseline.

What Reinforcement Learning Is (And What It Is Not)

Reinforcement learning (RL) is machine learning for decisions over time. An agent takes an action, the world changes, and the agent gets a reward (or penalty). That reward shapes what it tries next.

RL is not “a smarter chatbot.” RL is not a shortcut for writing product descriptions. And RL is not a label-driven training setup where you already know the right answer for each input.

Agent, Environment, State, Action, Reward

Here is the simplest mental model we use when explaining RL to business teams:

- Agent: the decision-maker. A pricing bot, a budget pacing controller, a routing planner.

- Environment: the system the agent acts in. Your ad platform, your WooCommerce store, your warehouse flow.

- State: what the agent can see right now. Spend so far today, inventory levels, session context.

- Action: what the agent can do. Increase bid, show offer A, hold inventory, route driver to stop 3.

- Reward: the score. Revenue, conversions, on-time delivery, fewer refunds, lower handling time.

Cause-and-effect is the whole point: reward design affects agent behavior. If you reward “clicks,” the agent will chase clicks. If you reward “profit after returns,” the agent will learn a different pattern.
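The five terms map onto a few lines of code. Below is a minimal Python sketch of the agent-environment loop. It assumes a made-up budget-pacing environment: `PacingEnv`, `even_pacing_agent`, the 24 hourly decisions, and the random conversion rates are all hypothetical. The agent here is a fixed rule, not a learner. An RL method would replace `even_pacing_agent` with a policy that improves from the rewards it collects.

```python
import random
from dataclasses import dataclass


@dataclass
class PacingEnv:
    """Hypothetical toy environment: spend a daily ad budget over 24 hourly decisions."""
    budget: float = 100.0
    hours: int = 24
    seed: int = 0

    def reset(self) -> tuple[int, float]:
        self.rng = random.Random(self.seed)
        self.hour = 0
        self.remaining = self.budget
        return (self.hour, self.remaining)  # state: hour of day, budget left

    def step(self, spend: float) -> tuple[tuple[int, float], float, bool]:
        spend = min(spend, self.remaining)   # the agent cannot spend money it does not have
        self.remaining -= spend
        # Reward: simulated conversions; each hour converts spend at a random efficiency.
        reward = spend * self.rng.uniform(0.02, 0.08)
        self.hour += 1
        done = self.hour >= self.hours or self.remaining <= 0
        return (self.hour, self.remaining), reward, done


def even_pacing_agent(state: tuple[int, float], hours: int = 24) -> float:
    """Action: spread whatever budget is left evenly over the hours that are left."""
    hour, remaining = state
    return remaining / max(hours - hour, 1)


env = PacingEnv()
state = env.reset()
total_reward, done = 0.0, False
while not done:
    action = even_pacing_agent(state)        # agent reads the state and picks an action
    state, reward, done = env.step(action)   # environment changes and returns a reward
    total_reward += reward
print(f"hours run: {state[0]}, budget left: {state[1]:.2f}, reward: {total_reward:.2f}")
```

To test a different reward, you change only the line that computes `reward`. The rest of the loop stays the same, and that single line is what steers any learning agent plugged into it.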

How RL Differs From Supervised And Unsupervised Learning

Supervised learning uses labeled examples. You show inputs and correct outputs, then the model learns to match.

Unsupervised learning finds patterns without labels. It groups customers or detects clusters in behavior.

RL learns through interaction. The agent tries actions and learns from the reward signal, even when the reward shows up later. Delayed feedback changes everything: action sequences affect outcomes, not just single predictions.

Sources: Sutton and Barto’s classic RL text remains the clearest overview, and IBM’s summaries help non-research teams get the terms right.

- Reinforcement Learning: An Introduction (2nd ed.), Richard S. Sutton and Andrew G. Barto, 2018, http://incompleteideas.net/book/the-book-2nd.html

- What is reinforcement learning?, IBM, 2023, https://www.ibm.com/topics/reinforcement-learning

- Reinforcement learning, Wikipedia, 2025, https://en.wikipedia.org/wiki/Reinforcement_learning

- Supervised learning, Wikipedia, 2025, https://en.wikipedia.org/wiki/Supervised_learning

When Reinforcement Learning Actually Makes Sense

Teams reach for reinforcement learning when they feel stuck. That instinct can be right, but only in certain shapes of problems.

Right-Fit Problems: Sequential Decisions And Feedback Loops

RL fits when decisions stack up and feedback arrives as a result of the whole chain.

Good fits look like this:

- You act many times per day (or per session), not once per quarter.

- The system reacts to the action. The next state changes.

- You can measure a reward signal consistently.

Examples we see in business:

- Ad budget pacing: spend early affects what is left later. Pacing affects delivery.

- Offer sequencing: which offer you show now affects whether a customer needs a discount later.

- Support triage: routing a ticket to the right queue affects resolution time and future follow-ups.

This is where “Agent -> changes -> Environment” becomes real. Your controller changes spend, spend changes auction exposure, exposure changes conversions, conversions change reward.

Wrong-Fit Problems: Static Prediction And One-Off Content Tasks

RL is a poor fit when you just need a single prediction from a snapshot.

Bad fits:

- Image classification.

- “Write me 50 blog posts.”

- A one-time report.

Those cases usually want supervised learning, retrieval, or a content workflow with human review.

If you are trying to improve website outcomes, we often see more wins from tightening the basics first: page speed, product page structure, tracking hygiene, and clean funnels. RL can sit on top later.

If you want a practical foundation for marketing systems first, you might like our pages on WordPress SEO services and WooCommerce solutions.

Source:

- What is reinforcement learning?, IBM, 2023, https://www.ibm.com/topics/reinforcement-learning

Core Concepts You Need Before You Touch Any Tools

Before you open a notebook or buy a platform, you need the concepts. They will save you from expensive “it learned something, but not what we meant” moments.

Policies, Value Functions, Exploration Vs. Exploitation

A policy is the agent’s strategy. It maps state to action.

A value function estimates long-term reward. It answers: “If we are here now, how good is this situation if we keep acting smart?” Value estimates drive decisions when rewards arrive late.
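Here is a minimal Python sketch that shows both ideas side by side. It assumes a made-up three-step customer journey. The `policy` dictionary is the strategy: a state goes in, an action comes out. `estimate_value` approximates the value function by averaging the total reward of many simulated runs from each state, which is a Monte Carlo estimate. The states, actions, probabilities, and costs are all hypothetical.

```python
import random

# Hypothetical customer journey: states 0 -> 1 -> 2 -> 3, where 3 means "purchased" (terminal).
ACTIONS = {
    # action: (probability of advancing one state, cost per try)
    "nudge": (0.6, 0.1),   # e.g. show an offer: works more often but costs margin
    "wait": (0.2, 0.0),    # do nothing: free, but slower
}
PURCHASE_REWARD = 1.0
TERMINAL = 3

# A policy maps each state to an action.
policy = {0: "nudge", 1: "nudge", 2: "wait"}


def rollout(state: int, rng: random.Random, max_steps: int = 100) -> float:
    """Follow the policy from `state` until purchase (or the step limit); return total reward."""
    total = 0.0
    for _ in range(max_steps):
        if state == TERMINAL:
            break
        p_advance, cost = ACTIONS[policy[state]]
        total -= cost
        if rng.random() < p_advance:
            state += 1
            if state == TERMINAL:
                total += PURCHASE_REWARD
    return total


def estimate_value(state: int, n_rollouts: int = 5000, seed: int = 0) -> float:
    """Monte Carlo value estimate: average total reward when starting in `state`."""
    rng = random.Random(seed)
    return sum(rollout(state, rng) for _ in range(n_rollouts)) / n_rollouts


values = {s: estimate_value(s) for s in range(TERMINAL + 1)}
print({s: round(v, 2) for s, v in values.items()})
```

The purchase is worth 1.0, and each "nudge" costs 0.1 per try. So the estimates should land near 0.67, 0.83, and 1.0 for states 0, 1, and 2. State 3 is terminal, so its value is 0. No state pays anything until the purchase, yet state 0 is worth less than state 2. That gap is what lets an agent reason about rewards that arrive late.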

Then you hit the tension:

- Exploration means trying unknown actions.

- Exploitation means repeating known good actions.

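Exploration costs revenue in the short term, because some decisions go to actions that may turn out worse. Too much exploitation costs learning in the long term, because the agent never discovers better options. How you balance the two decides how much risk your rollout carries.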
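One simple way to manage this tradeoff is epsilon-greedy: explore at random a small fraction of the time, and exploit the best-known action otherwise. Below is a minimal Python sketch on a hypothetical three-offer test. The offer names, their true conversion rates, and `epsilon = 0.1` are assumptions for illustration. Epsilon-greedy is one of several exploration rules, not the only option.

```python
import random

# Hypothetical offers and their true conversion rates (unknown to the agent).
TRUE_RATES = {"offer_a": 0.05, "offer_b": 0.12, "offer_c": 0.03}


def run_epsilon_greedy(n_rounds: int = 20_000, epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    counts = {offer: 0 for offer in TRUE_RATES}
    estimates = {offer: 0.0 for offer in TRUE_RATES}   # running mean conversion per offer
    conversions = 0
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            offer = rng.choice(list(TRUE_RATES))        # explore: try any offer at random
        else:
            offer = max(estimates, key=estimates.get)   # exploit: best offer so far
        reward = 1 if rng.random() < TRUE_RATES[offer] else 0  # simulated conversion
        counts[offer] += 1
        estimates[offer] += (reward - estimates[offer]) / counts[offer]  # incremental mean
        conversions += reward
    return counts, estimates, conversions


counts, estimates, conversions = run_epsilon_greedy()
print(counts)
print({k: round(v, 3) for k, v in estimates.items()})
print("total conversions:", conversions)
```

A higher `epsilon` spends more traffic on offers that currently look worse, which is the short-term cost, but it makes the estimates more reliable. Setting `epsilon` to 0 can lock the agent onto an offer it tried early, so it never tests the others.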

Episodes, Discounting, And Reward Design Pitfalls

An episode is one run from start to finish. A customer session can be an episode. A day of budget pacing can be an episode.

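Discounting weights future reward against near-term reward. A discount factor γ (gamma), set between 0 and 1, multiplies a reward by γ for each step it is delayed. A low γ pushes the agent toward short-term thinking, and a γ close to 1 pushes it toward long-term thinking.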
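Here is the arithmetic behind discounting as a short Python sketch. The episode is hypothetical: three steps that each cost 1, followed by a payoff of 10. Think of ad spend before a delayed purchase. The function computes the discounted return G = r0 + γ·r1 + γ²·r2 + ... It works backwards, because each step's return equals its reward plus γ times the next step's return.

```python
def discounted_return(rewards: list[float], gamma: float) -> float:
    """G = r0 + gamma*r1 + gamma^2*r2 + ... for one episode's reward sequence."""
    g = 0.0
    for reward in reversed(rewards):   # G_t = r_t + gamma * G_{t+1}
        g = reward + gamma * g
    return g


# Hypothetical episode: small costs now, a bigger payoff at the end.
episode = [-1.0, -1.0, -1.0, 10.0]
for gamma in (0.5, 0.9, 0.99):
    print(gamma, round(discounted_return(episode, gamma), 3))
```

With γ = 0.5 the episode scores -0.5, so a short-sighted agent would avoid the upfront costs. With γ = 0.9 it scores 4.58, and with γ = 0.99 about 6.73. The rewards are the same in each case; only γ changes, and so does the behavior.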

Reward design causes the most trouble. Common pitfalls:

- You reward clicks, and the agent buys cheap traffic that never converts.

- You reward conversion rate, and the agent stops spending on new audiences.

- You reward average order value, and the agent pushes bundles that raise returns.

Reward -> shapes -> policy. If the reward is wrong, the policy will look “smart” while hurting the business.
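A tiny Python sketch makes the clicks pitfall concrete. It scores two hypothetical traffic strategies with two candidate reward functions. The `Outcome` fields and all numbers are made up for illustration. Nothing here learns. The sketch only shows that the reward function alone decides which behavior counts as "best".

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Hypothetical daily results for one traffic strategy."""
    clicks: int
    revenue: float
    costs: float     # ad spend plus cost of goods
    refunds: float   # money returned to customers


def reward_clicks(o: Outcome) -> float:
    return float(o.clicks)


def reward_profit_after_returns(o: Outcome) -> float:
    return o.revenue - o.costs - o.refunds


# Illustrative numbers only, not real campaign data.
STRATEGIES = {
    "cheap_broad_traffic": Outcome(clicks=5000, revenue=1200.0, costs=1100.0, refunds=300.0),
    "targeted_traffic": Outcome(clicks=900, revenue=2700.0, costs=1800.0, refunds=150.0),
}


def preferred_strategy(reward_fn) -> str:
    """The strategy an agent would be pushed toward under a given reward."""
    return max(STRATEGIES, key=lambda name: reward_fn(STRATEGIES[name]))


for fn in (reward_clicks, reward_profit_after_returns):
    print(f"{fn.__name__} -> {preferred_strategy(fn)}")
```

Under `reward_clicks`, the cheap traffic wins with 5,000 clicks versus 900. Under `reward_profit_after_returns`, the cheap traffic loses 200 while the targeted traffic earns 750. An agent trained on each reward would drift toward the matching strategy.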

Source:

- Reinforcement Learning: An Introduction (2nd ed.), Richard S. Sutton and Andrew G. Barto, 2018, http://incompleteideas.net/book/the-book-2nd.html

Common RL Approaches In Plain English

You do not need to memorize algorithms to make good decisions about RL. You do need a plain-English map of the main families.

Model-Free Vs. Model-Based RL

Model-free RL learns by trial and error without building an explicit model of how the environment works. It can work well when simulation is hard.

Model-based RL learns (or uses) a model of the environment, then plans actions by simulating outcomes. It can reduce real-world trial costs when the model is decent.

In business settings, model-based approaches often show up as “simulate scenarios” tools. Model-free approaches show up as “learn a policy from live data” setups.
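To show what "planning with a model" means, here is a minimal Python sketch of value iteration, a dynamic-programming planning method covered in the Sutton and Barto text. It runs on a hypothetical two-state inventory model. The planner is given the transition probabilities and rewards in `MODEL` up front. In a model-based RL system, those numbers would be learned from data or come from a simulator. Because the planner can look up every outcome in the model, it finds a policy without taking a single real action.

```python
# Hypothetical inventory model: MODEL[state][action] lists (probability, next_state, reward).
MODEL = {
    "low": {
        "reorder": [(1.0, "ok", -2.0)],                    # pay to restock
        "hold": [(1.0, "low", -5.0)],                      # stockouts lose sales
    },
    "ok": {
        "hold": [(0.7, "ok", 3.0), (0.3, "low", 3.0)],     # sell; stock may run low
        "reorder": [(1.0, "ok", 1.0)],                     # sell, but pay extra holding cost
    },
}


def q_value(state: str, action: str, values: dict, gamma: float) -> float:
    """Expected reward plus discounted next-state value, read straight from the model."""
    return sum(p * (r + gamma * values[nxt]) for p, nxt, r in MODEL[state][action])


def value_iteration(gamma: float = 0.9, tol: float = 1e-9):
    values = {s: 0.0 for s in MODEL}
    while True:
        # Bellman optimality update: each state takes the value of its best action.
        new_values = {s: max(q_value(s, a, values, gamma) for a in MODEL[s]) for s in MODEL}
        delta = max(abs(new_values[s] - values[s]) for s in MODEL)
        values = new_values
        if delta < tol:
            break
    # Greedy plan with respect to the converged values.
    plan = {s: max(MODEL[s], key=lambda a: q_value(s, a, values, gamma)) for s in MODEL}
    return values, plan


values, plan = value_iteration()
print(plan)
print({s: round(v, 2) for s, v in values.items()})
```

With these made-up numbers, the plan is to reorder when stock is low and hold when it is ok. If the model is wrong, the plan is wrong too. That is why model-based approaches depend on the model being decent.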

Q-Learning, Policy Gradients, And Actor-Critic At A High Level

- Q-learning learns the value of taking an action in a state. It asks: “How good is action A when we are in state S?”

- Policy gradients adjust the policy directly. They push the policy toward actions that earned higher reward.

- Actor-critic splits the job. The actor chooses actions. The critic evaluates how good they were.

A practical translation: value-based methods focus on scoring actions, policy-based methods focus on shaping behavior, and actor-critic mixes both.
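For a feel of the value-based family, here is a minimal tabular Q-learning sketch in Python. It runs on a hypothetical five-stage funnel, for example a checkout flow where stage 4 is a completed order. The agent never reads the rules inside `step`. It only sees the (state, action, reward, next state) samples that `step` returns, which is what makes this model-free. The funnel, the rewards, and the settings `alpha`, `gamma`, and `epsilon` are illustrative assumptions.

```python
import random

N_STAGES = 5                  # stages 0..4; stage 4 = order completed (terminal)
ACTIONS = ("back", "forward")
STEP_COST, GOAL_REWARD = -0.1, 1.0


def step(stage: int, action: str) -> tuple[int, float, bool]:
    """Toy environment. The agent only sees the samples this returns, never the rules."""
    if action == "forward":
        nxt = min(stage + 1, N_STAGES - 1)
    else:
        nxt = max(stage - 1, 0)
    done = nxt == N_STAGES - 1
    return nxt, (GOAL_REWARD if done else STEP_COST), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STAGES) for a in ACTIONS}
    for _ in range(episodes):
        stage, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                         # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(stage, a)])   # exploit
            nxt, reward, done = step(stage, action)
            # Target: reward now plus the discounted best Q-value of the next stage.
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(stage, action)] += alpha * (reward + gamma * best_next - q[(stage, action)])
            stage = nxt
    return q


q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STAGES - 1)}
print(greedy)
print(round(q[(0, "forward")], 3))
```

After training, the greedy policy should pick "forward" at every stage. `q[(0, "forward")]` should approach 0.458, which is three step costs of -0.1 plus the discounted goal reward: -0.1 - 0.09 - 0.081 + 0.729.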

Source:

- Reinforcement Learning: An Introduction (2nd ed.), Richard S. Sutton and Andrew G. Barto, 2018, http://incompleteideas.net/book/the-book-2nd.html

Reinforcement Learning In Real Business Systems

This is the part people care about: where RL can help without turning your company into a lab.

Marketing And Ecommerce Examples: Budget Pacing, Offers, And Personalization

In ecommerce, RL can fit when you have repeated decisions and measurable outcomes.

Examples:

- Budget pacing: The agent adjusts spend based on time of day and performance. Spend decisions affect delivery. Delivery affects conversions.

- Dynamic offers: The agent chooses which incentive to show based on margin, inventory, and customer behavior.

- Personalization: The agent learns which content block or product set to show, using conversion or profit as reward.

We often connect these loops to WordPress and WooCommerce systems. WordPress -> captures -> events. Events -> feed -> decision logic. Decision logic -> changes -> on-site experience.

If you are building the site layer, start with clean tracking and stable page templates. Then add the decision loop. Our WordPress ecommerce development engagements usually follow that order because it prevents “model drift” caused by messy analytics.

Operations Examples: Inventory, Routing, Scheduling, And Support Triage

Operations teams have natural RL-shaped problems:

- Inventory: reorder decisions now affect stockouts later.

- Routing: a route choice now affects fuel and on-time delivery later.

- Scheduling: staffing decisions affect wait times and overtime.

- Support triage: the first routing choice affects handle time, escalations, and CSAT.

RL -> improves -> decision sequences when feedback loops stay measurable and consistent.

Source:

- What is reinforcement learning?, IBM, 2023, https://www.ibm.com/topics/reinforcement-learning

How To Run A Safe RL Pilot (Governance First)

We treat RL like a decision engine, not a demo. That means we map the workflow, then we constrain it.

Define The Workflow: Trigger / Inputs / Job / Outputs / Guardrails

Before you touch any tools, write the workflow like a standard operating procedure (SOP):

- Trigger: what starts a decision? New session, new cart, hour mark, ticket created.

- Inputs: what data can the agent use? Keep it minimal. Avoid sensitive fields.

- Job: what does the RL system do? Pick action, score action, log action.

- Outputs: what changes in the real system? Offer selection, bid change, routing decision.

- Guardrails: what must never happen, and what enforces it?

  - Reward bounds and spend caps

  - Allowed actions list

  - Rollback plan

  - Mandatory logging

  - Human approval for high-risk actions

Data handling matters here. If you work in legal, healthcare, insurance, or finance, do not paste client or patient data into unvetted tools. Keep humans in the loop.
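The guardrails in the workflow checklist can be enforced in code that sits between the agent and the real system. Below is a minimal Python sketch that assumes a hypothetical bid-adjustment agent. The action names, the caps, and the `Guardrails` class are illustrative, not any real platform's API. The sketch covers the allowed-actions list, spend caps, human approval for high-risk actions, and mandatory logging. A rollback plan and reward bounds would live alongside it.

```python
import json
import time
from dataclasses import dataclass, field

# Hypothetical guardrail settings for a bid-adjustment agent.
ALLOWED_ACTIONS = {"raise_bid", "lower_bid", "hold"}
HIGH_RISK_ACTIONS = {"raise_bid"}   # these require human approval
MAX_SPEND_PER_DECISION = 50.0
DAILY_SPEND_CAP = 500.0


@dataclass
class Guardrails:
    spent_today: float = 0.0
    log: list[str] = field(default_factory=list)  # in production, ship these to durable storage

    def review(self, action: str, spend: float, human_approved: bool = False) -> tuple[str, float]:
        """Return the (action, spend) that may actually run, and log the decision either way."""
        proposed = {"action": action, "spend": spend}
        if action not in ALLOWED_ACTIONS:
            action, spend, reason = "hold", 0.0, "action not on allowed list"
        elif action in HIGH_RISK_ACTIONS and not human_approved:
            action, spend, reason = "hold", 0.0, "waiting for human approval"
        else:
            capped = max(0.0, min(spend, MAX_SPEND_PER_DECISION, DAILY_SPEND_CAP - self.spent_today))
            reason = "ok" if capped == spend else "spend capped"
            spend = capped
        self.spent_today += spend
        self.log.append(json.dumps({
            "ts": time.time(),
            "proposed": proposed,
            "executed": {"action": action, "spend": spend},
            "reason": reason,
        }))
        return action, spend


guard = Guardrails()
print(guard.review("raise_bid", 80.0))                       # ('hold', 0.0): no approval yet
print(guard.review("raise_bid", 80.0, human_approved=True))  # ('raise_bid', 50.0): per-decision cap
print(guard.review("pause_all_campaigns", 0.0))              # ('hold', 0.0): not on allowed list
```

In this design, the agent only proposes actions and the guardrail layer decides what runs. A bad reward or a buggy policy can therefore produce odd proposals but cannot exceed the caps. Every decision leaves an audit record, including the ones that were blocked.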

Start In Shadow Mode, Add Human Review, Then Gradually Expand

This is the safest way to start:

- Run in shadow mode: the agent makes recommendations, but it does not act. You log everything.

- Compare against baseline: you measure outcomes against your current rules.

- Add human review: a marketer, ops lead, or compliance owner approves actions.

- Expand scope slowly: small budgets, narrow segments, limited hours.

Shadow mode -> reduces -> business risk. Logging -> supports -> audits. Human review -> prevents -> bad reward hacks.
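A shadow-mode loop can be very small. In the Python sketch below, the existing business rule makes every live decision, and the agent's recommendation is only logged next to it. `baseline_rule`, `agent_recommend`, and the simulated checkout contexts are hypothetical stand-ins. In a real pilot, `agent_recommend` would call your trained policy.

```python
import random
from dataclasses import dataclass


@dataclass
class ShadowRecord:
    context: dict
    live_action: str     # what the current rule actually did
    agent_action: str    # what the agent would have done (logged, never executed)


def baseline_rule(context: dict) -> str:
    """Hypothetical current rule: discount only for returning customers."""
    return "discount" if context["returning"] else "no_discount"


def agent_recommend(context: dict) -> str:
    """Stand-in for a trained policy (hypothetical): discount larger carts."""
    return "discount" if context["cart_value"] > 80 else "no_discount"


def run_shadow(n: int = 1000, seed: int = 0) -> list[ShadowRecord]:
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        context = {"returning": rng.random() < 0.3, "cart_value": rng.uniform(10, 150)}
        live = baseline_rule(context)          # only the baseline touches production
        shadow = agent_recommend(context)      # recorded for comparison only
        records.append(ShadowRecord(context, live, shadow))
    return records


records = run_shadow()
disagreements = [r for r in records if r.live_action != r.agent_action]
agreement = 1 - len(disagreements) / len(records)
print(f"agreement with baseline: {agreement:.0%}; {len(disagreements)} cases for human review")
```

The agreement rate and the list of disagreements show reviewers where the agent would behave differently. The agent's actions never reach customers in shadow mode. Measuring how those different decisions would actually perform therefore needs a further step, such as a small controlled test.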

If you want this inside WordPress, we often build a thin “brain layer” between triggers and actions using webhooks and a small custom plugin. WordPress hooks like save_post or WooCommerce order events can trigger decisions, while logs land in a safe datastore. If you need ongoing help, our website maintenance services can cover monitoring and rollback readiness.

Source:

- Reinforcement Learning: An Introduction (2nd ed.), Richard S. Sutton and Andrew G. Barto, 2018, http://incompleteideas.net/book/the-book-2nd.html

Conclusion

Reinforcement learning pays off when your business has repeat decisions, real feedback, and the discipline to constrain the loop. If you only need better copy or a prettier site, you do not need RL. If you need a system that learns what to do next, RL can fit, but only with reward design, logging, and human oversight.

If you want to explore RL without betting the quarter on it, start with one pilot: one decision, one reward, one set of guardrails. Then let the data earn the right to expand.

Frequently Asked Questions About Reinforcement Learning

What is reinforcement learning (RL) in machine learning?

Reinforcement learning (RL) trains an agent to make a sequence of decisions by interacting with an environment and receiving rewards or penalties. Over time, the agent learns a policy (strategy) that maximizes long-term reward. This is especially useful when feedback is delayed and actions change future outcomes.

How does reinforcement learning differ from supervised and unsupervised learning?

Supervised learning learns from labeled input-output examples, and unsupervised learning finds patterns without labels. Reinforcement learning learns through interaction: the agent takes actions, observes outcomes, and updates behavior from reward signals. This makes RL well suited to sequential decisions where cause and effect unfold over time.

When does reinforcement learning actually make sense for business use cases?

Reinforcement learning makes sense when decisions repeat frequently, actions change the next state, and you can measure rewards consistently. Strong fits include ad budget pacing, offer sequencing, personalization, routing, scheduling, inventory decisions, and support triage—any workflow where today’s choice affects tomorrow’s options and results.

Why does reward design matter so much in reinforcement learning (RL)?

In reinforcement learning, the reward function defines “success,” so the agent will optimize whatever you measure, even if it is the wrong thing. Rewarding clicks can drive low-quality traffic; rewarding conversion rate can reduce exploration of new audiences; rewarding average order value can increase returns. Poor rewards produce “smart” policies that harm the business.

What’s the difference between model-free and model-based reinforcement learning?

Model-free reinforcement learning learns by trial and error without explicitly modeling how the environment works, which can help when simulation is difficult. Model-based RL uses or learns a model of the environment to simulate outcomes and plan actions, often reducing costly real-world experimentation when the model is accurate enough.

How do you run a safe reinforcement learning pilot without risking production systems?

Start with governance: define triggers, inputs, outputs, and guardrails like spend caps, allowed actions, rollback plans, and mandatory logging. Run reinforcement learning in shadow mode first so it only recommends actions. Then compare to a baseline, add human approval, and expand scope gradually with tight monitoring.

Some of the links in this post are affiliate links. If you click a link and make a purchase, we receive an affiliate commission at no extra cost to you.
